From Kubernetes ingress controller to Istio through Gateway API (a very loooong journey)

Application networking is becoming more complex every single day, but tools like Istio help us keep it under control.

In this article I'd like to talk (with some personal thoughts) about my fast, deep journey from the Kubernetes Ingress Controller to Istio, and why you should consider this transition too. I will also discuss the new (right now at v0.5.1) Kubernetes Gateway API, an open source project managed by the SIG-NETWORK community.

Reading the lines above, you might think that I am urging everyone to use Istio

.... absolutely NOT!

As with every piece of technology, before adopting it we first need to analyze the specific problem from every angle (360°) and then choose the right solution, which could be, for example, Istio.

Kubernetes Ingress Controller

The Kubernetes Ingress Controller was a nice first step toward decoupling the routing logic (the Ingress resource) from the actual engine (for example Nginx).

👍 Compared with a classic reverse proxy installation, the Kubernetes Ingress Controller allowed you to:

- Write reverse proxy agnostic HTTP rules

For example, the Apache reverse proxy is configured with an XML-like syntax, Nginx uses a completely different one, HAProxy has its own, and so on.

With the Kubernetes Ingress Controller you are no longer tied to a specific reverse proxy configuration language, so in theory you can switch from one reverse proxy engine (like Nginx) to another (like HAProxy) without hurting yourself.

- Live reload

With the Kubernetes Ingress Controller, the reverse proxy usually has two components (two containers inside the same pod): ingress-controller and reverse-proxy.

The first one watches the routing rules (Kubernetes Ingress resources), translates them into the configuration of the specific reverse proxy and then (if the technology requires it) reloads the reverse-proxy container.
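To make the "reverse proxy agnostic" idea concrete, here is a minimal sketch of a plain Ingress resource. The host, service name, port and the `nginx` class name are my own placeholders, not taken from a real setup; the point is only that nothing in the rule itself is written in Nginx (or HAProxy) syntax.

```yaml
# Minimal sketch of a controller-agnostic HTTP rule.
# Names, host and port are hypothetical placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myservice
spec:
  # Selects which ingress controller picks up this resource;
  # in theory, swapping engines means changing only this value.
  ingressClassName: nginx
  rules:
    - host: myservice.example.com
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: myservice
                port:
                  number: 8080
```

The ingress-controller container watches resources like this one and renders them into the native configuration of its reverse proxy.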

👎 At first this looked fantastic, but as soon as you start using it on even small or medium production projects you can easily see limitations that could really impact your (or your company's) business:

- Limited to the HTTP protocol

By design, the Kubernetes Ingress resource only lets you define HTTP rules, so if you need custom TCP/UDP rules you must use a Custom Resource Definition (CRD) provided by the reverse proxy technology.

Reverse proxy lock-in is starting to get very close.

- No "Advanced" routing rules available

It is often necessary to rewrite the URL before sending traffic to the upstream. In that case too, you must read the reverse proxy manual in order to use a custom Ingress resource annotation or (as for TCP/UDP) a Custom Resource Definition (CRD) provided by the reverse proxy technology.

You are now officially locked in to your chosen reverse proxy technology, and changing reverse proxy provider will definitely hurt.

Think about having to maintain two or three different reverse proxy technologies, each with its own CRDs for all the basic and advanced routing rules. This quickly gets out of control and is not easy to maintain.
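As an illustration of how quickly the lock-in shows up, this is roughly what a URL rewrite looks like with the community ingress-nginx controller. The annotations are specific to that controller; the host, path and service names are placeholders of mine.

```yaml
# Sketch of a URL rewrite with ingress-nginx: /something/foo is sent
# to the upstream as /foo. Host and service names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rewrite-example
  annotations:
    # Both annotations are only understood by ingress-nginx.
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
    - host: myservice.example.com
      http:
        paths:
          - path: /something(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: myservice
                port:
                  number: 8080
```

Another controller will simply ignore these annotations, so the same rewrite has to be expressed again in its own dialect.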

Out of these limitations, the Kubernetes Gateway API from the SIG-NETWORK community started to grow.

Kubernetes Gateway API

I would say that the Kubernetes Gateway API is the right evolution of the Ingress resource, and I strongly believe in it.

Without the Kubernetes Gateway API, we inevitably fall back on the Custom Resource Definitions (CRDs) of a specific Ingress controller. The main missing feature of the Kubernetes Ingress resource was TCP ingress routing, because with the Ingress resource you can only define HTTP routes 😥.

So we ended up with piles of heterogeneous CRD YAML. For example, in order to expose TCP services using Traefik (❤️) you need to define something like

    apiVersion: traefik.containo.us/v1alpha1
    kind: IngressRouteTCP
    metadata:
      name: ingressroutetcpfoo
    spec:
      entryPoints:                    # [1]
        - footcp
      routes:                         # [2]
        - match: HostSNI(`*`)         # [3]
          services:                   # [4]
            - name: foo               # [5]
              port: 8080              # [6]
              weight: 10              # [7]
              terminationDelay: 400   # [8]
    ...

And because multitenant clusters are almost the norm today (for maintainability, cost saving, and so on), this becomes unmanageable 🤯🤯🤯

🎆 Finally, thanks to the new Kubernetes Gateway API, this is almost an old nightmare.
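For comparison, here is a sketch of how a similar TCP exposure could be written with the Gateway API itself. I am assuming a release in the v0.5.x line, where `Gateway` is `v1beta1` and `TCPRoute` is still `v1alpha2` (experimental channel), and a controller that actually supports `TCPRoute`. The gateway name, class name and listener port are placeholders of mine.

```yaml
# Sketch only: vendor-neutral TCP routing with the Gateway API.
# shared-gateway, example-class and port 9000 are hypothetical.
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: shared-gateway
spec:
  gatewayClassName: example-class
  listeners:
    - name: footcp
      protocol: TCP
      port: 9000
      allowedRoutes:
        kinds:
          - kind: TCPRoute
---
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: TCPRoute
metadata:
  name: foo-tcp
spec:
  parentRefs:
    - name: shared-gateway
      sectionName: footcp   # attach to the TCP listener above
  rules:
    - backendRefs:
        - name: foo
          port: 8080
```

The resource kinds stay the same whichever implementation sits behind `gatewayClassName`, which is exactly what the vendor CRDs could not offer.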

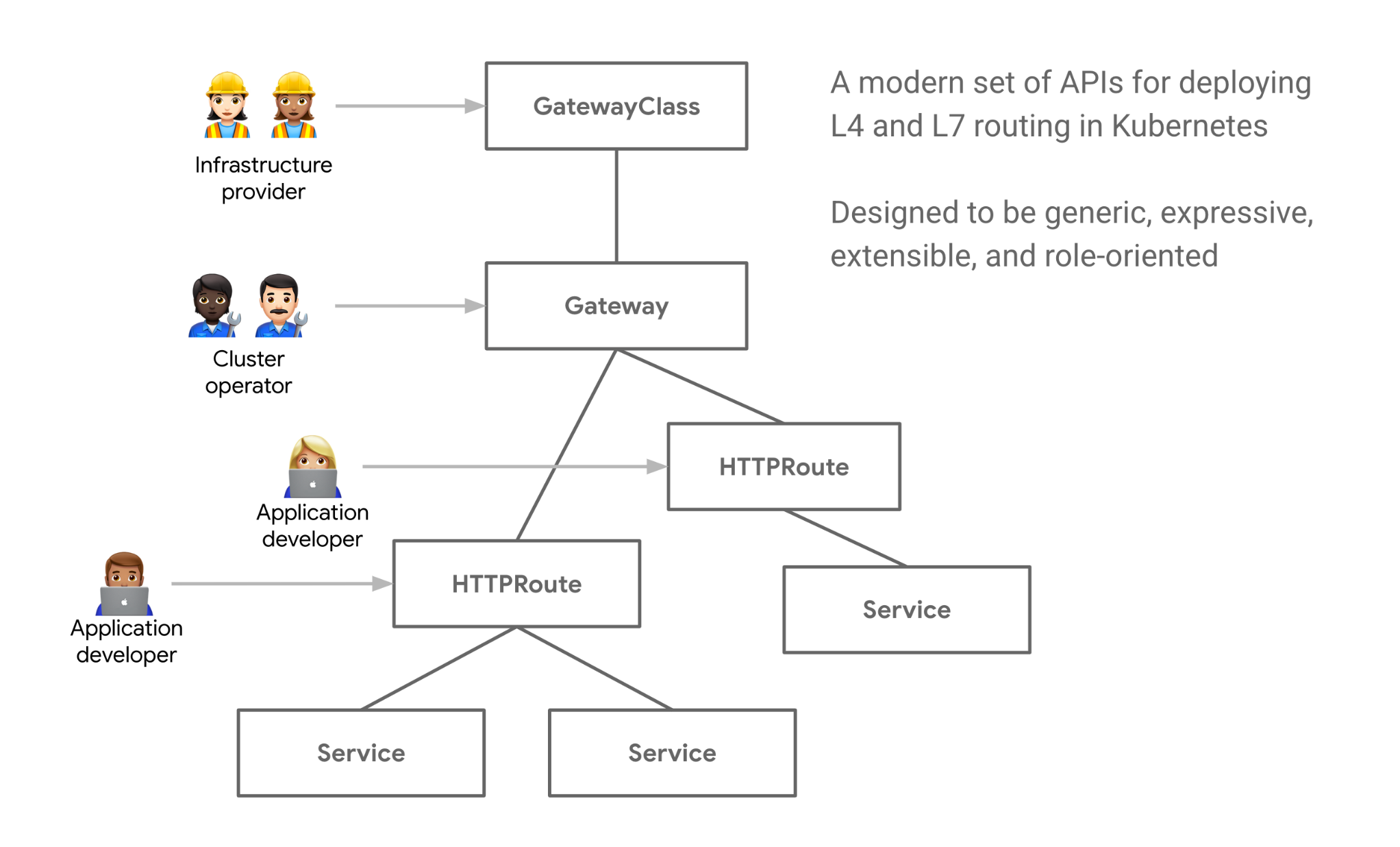

Another nice feature of the Kubernetes Gateway API is definitely its role-oriented architecture. Whether it’s roads, power, data centers, or Kubernetes clusters, infrastructure is built to be shared. Compared to the "old" Ingress resource, you can finally give developers the power to define their routes and nothing else. For example, it is the responsibility of the cluster operator to define hosts (myservice.example.com) and their certificates, while it is the responsibility of the application developer to define and maintain their own routing (myservice.example.com/api/).

It's all about responsibility.
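A rough sketch of that split, assuming the `v1beta1` resources of the v0.5.x releases: the cluster operator owns the `Gateway` (host, certificate, who may attach), and the application developer owns only an `HTTPRoute` in their own namespace. Namespaces, the label, the Secret name and the class name are placeholders I made up for the example.

```yaml
# Owned by the cluster operator: host, TLS certificate and
# which namespaces are allowed to attach routes.
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: shared-gateway
  namespace: infra            # hypothetical operator namespace
spec:
  gatewayClassName: example-class
  listeners:
    - name: https
      hostname: myservice.example.com
      protocol: HTTPS
      port: 443
      tls:
        mode: Terminate
        certificateRefs:
          - kind: Secret
            name: myservice-example-com-cert
      allowedRoutes:
        namespaces:
          from: Selector
          selector:
            matchLabels:
              shared-gateway-access: "true"   # hypothetical label
---
# Owned by the application developer: routing only.
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: myservice-api
  namespace: team-a           # hypothetical application namespace
spec:
  parentRefs:
    - name: shared-gateway
      namespace: infra
  hostnames:
    - myservice.example.com
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /api
      backendRefs:
        - name: myservice
          port: 8080
```

With ordinary Kubernetes RBAC on these two kinds, developers can change routes without ever touching hosts or certificates.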

All that glitters is not gold (or nearly).. unfortunately:

- The Kubernetes Gateway API is still young (v0.5.1)

- Istio goes beyond it, adding a service mesh implementation alongside the gateway concept 💣💣💣

Istio

Just to clarify, Istio (developed by Google together with other big tech companies) is only one of the projects that implement service mesh technology. Other implementations are available on the market as well.

Personally, the first time I heard about a service mesh inside Kubernetes I immediately thought of a complex overlay network, and for this reason I did not enter that awesome world until last year...

🙏🙏🙏 But Istio cleared up my (our?) overthinking problem. From the official README:

Note: The service mesh is not an overlay network. It simplifies and enhances how microservices in an application talk to each other over the network provided by the underlying platform.

We all know what Istio is (if not, please check their website): it works thanks to automatic injection of the Envoy proxy sidecar. All the Istio magic is possible because all traffic flows through the Envoy proxies, and this opens the door to all the main Istio features:

- Traffic management

- Observability

- Security capabilities
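As a small sketch of the sidecar auto-injection mentioned above: with a default Istio installation, injection is typically switched on per namespace with a label. The namespace name is a placeholder of mine.

```yaml
# Pods created in this namespace get an Envoy sidecar injected
# automatically (team-a is a hypothetical namespace name).
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    istio-injection: enabled
```

Pods that were already running before the label was added need to be recreated to get their sidecar.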

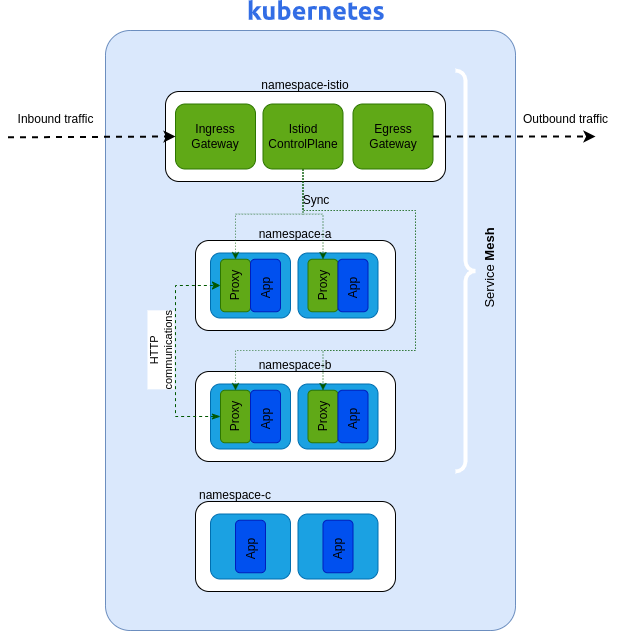

Let me give you a practical example of how I picture the Istio architecture.

Istio Ingress gateway

Let's stay in the Ingress field. One of the Istio components is of course the Ingress Gateway. It has many features, but what we are interested in is that

the Istio Ingress Gateway is the same concept as the Kubernetes Gateway API discussed above.

So the Istio Ingress Gateway is an abstraction that allows cluster operators to control inbound traffic and lets HTTP/TCP routes be defined at a lower level, closer to the applications, using dedicated Istio custom resources.

💡 Of course you can configure multiple Istio Ingress Gateways, for example to manage multiple inbound channels (e.g. intranet and internet).
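Here is a minimal sketch of that split with Istio's own resources: a `Gateway` owned by the cluster operator and a `VirtualService` owned by the application team. I am assuming the default ingress gateway deployment (labelled `istio: ingressgateway`); the namespaces, the Secret name and the service name are placeholders.

```yaml
# Cluster operator side: host, port and certificate.
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: public-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway     # label of the default ingress gateway pods
  servers:
    - port:
        number: 443
        name: https
        protocol: HTTPS
      tls:
        mode: SIMPLE
        credentialName: myservice-example-com-cert   # hypothetical Secret
      hosts:
        - myservice.example.com
---
# Application developer side: routes bound to the gateway above.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: myservice
  namespace: team-a           # hypothetical application namespace
spec:
  hosts:
    - myservice.example.com
  gateways:
    - istio-system/public-gateway
  http:
    - match:
        - uri:
            prefix: /api/
      route:
        - destination:
            host: myservice
            port:
              number: 8080
```

A second ingress gateway (say, for the intranet) would get its own `Gateway` resource whose selector points at a different set of gateway pods.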

The fantastic news is that Istio has two other nice features for users: the Istio Egress Gateway and the Istio service mesh.

Let's dive in!

Istio Egress Gateway

At the other edge, all traffic leaving the cluster flows through the Istio Egress Gateway (if enabled). As you can imagine, this opens the door to many interesting features (check out the official documentation for those).

Personally, I'd say an interesting use case is a large Kubernetes cluster in which only some dedicated nodes are allowed to reach the internet. Using the Istio Egress Gateway and some Kubernetes affinity tricks, you can manage it easily.
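One possible way to do the affinity part, assuming Istio is installed through an `IstioOperator` manifest (for example with `istioctl install -f`): enable the egress gateway and pin its pods to the dedicated nodes. The node label is purely hypothetical and would be whatever marks your internet-facing nodes.

```yaml
# Sketch only: schedule the egress gateway on dedicated nodes.
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  components:
    egressGateways:
      - name: istio-egressgateway
        enabled: true
        k8s:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      # hypothetical label set on the nodes that
                      # are allowed to reach the internet
                      - key: example.com/internet-egress
                        operator: In
                        values:
                          - "true"
```

This only decides where the gateway pods run. The outbound traffic still has to be routed through the gateway with Istio resources, and the other nodes still have to be blocked from reaching the internet at the network level.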

Istio Service Mesh

The service mesh is obviously the main feature of Istio. I personally liked moving from a centralized API gateway to a distributed service mesh to manage cluster-internal traffic.

As already described above, thanks to the auto-injected Envoy proxies, Istio lets you deeply customize HTTP routing. For example, you can create HTTP header based rules, A/B tests and canary releases, TCP rules, etc.
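A short sketch of what such rules can look like for in-mesh traffic: requests carrying a given header always go to the new version, while everything else is split 90/10 as a canary. The service name `reviews`, the `version` labels and the `x-beta-user` header are placeholders of mine.

```yaml
# Subsets map names (v1, v2) to pod labels of the same service.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
    - reviews
  http:
    # Header based rule: beta users always reach v2.
    - match:
        - headers:
            x-beta-user:        # hypothetical header
              exact: "true"
      route:
        - destination:
            host: reviews
            subset: v2
    # Canary: all remaining traffic is split by weight.
    - route:
        - destination:
            host: reviews
            subset: v1
          weight: 90
        - destination:
            host: reviews
            subset: v2
          weight: 10
```

Rules are evaluated in order, so the header match wins over the weighted split.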

In my personal opinion, Istio is today a good compromise between features and invasiveness. By invasiveness I mean the sidecar auto-injection.

🚀🚀🚀 Obviously the Istio team is already working on that:

here they talk about Ambient Mesh, an Istio installation without sidecar auto-injection --> https://istio.io/latest/blog/2022/introducing-ambient-mesh/

.

..

...May the microservices be with you 🤣
